This document records the process of deploying kube-prometheus.

Environment:

- Kubernetes 1.14.3, installed from binaries
- kube-prometheus 0.3 (release v0.3.0)

Compatibility reference

| kube-prometheus stack | Kubernetes 1.14 | Kubernetes 1.15 | Kubernetes 1.16 | Kubernetes 1.17 | Kubernetes 1.18 |
|---|---|---|---|---|---|
| release-0.3 | ✔ | ✔ | ✔ | ✔ | ✗ |
| release-0.4 | ✗ | ✗ | ✔ (v1.16.5+) | ✔ | ✗ |
| release-0.5 | ✗ | ✗ | ✗ | ✗ | ✔ |
| HEAD | ✗ | ✗ | ✗ | ✗ | ✔ |

Preparation

Download

```
$ wget https://github.com/coreos/kube-prometheus/archive/v0.3.0.tar.gz
$ tar -zxvf v0.3.0.tar.gz
```

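Configure NodePort

To reach the services from outside the Kubernetes cluster, you can use NodePort or an Ingress, whichever suits your environment. Here the Services are simply changed to NodePort.

Note that the nodePort values used below (39090, 33000, 39093) are outside the default NodePort range of 30000-32767. They are only accepted if kube-apiserver was started with a wider `--service-node-port-range`. Otherwise, pick ports inside the default range.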

```
$ cd kube-prometheus-0.3.0/manifests
$ vim prometheus-service.yaml
......
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 39090
......
$ vim grafana-service.yaml
......
  type: NodePort
  ports:
  - name: http
    port: 3000
    nodePort: 33000
......
$ vim alertmanager-service.yaml
......
  type: NodePort
  ports:
  - name: web
    port: 9093
    nodePort: 39093
......
```
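The API server rejects a Service whose nodePort falls outside its configured range (30000-32767 unless `--service-node-port-range` says otherwise). A quick pre-check of the chosen ports can therefore save a failed apply.

The sketch below is minimal and written in bash. `check_nodeports` is a hypothetical helper, and the range you pass should be whatever your kube-apiserver actually uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that every given nodePort lies inside a
# NodePort range written as "MIN-MAX" (the format of kube-apiserver's
# --service-node-port-range). Prints each out-of-range port and
# returns 1 if any were found, 0 otherwise.
check_nodeports() {
  local range="$1"; shift
  local min="${range%-*}"   # part before the dash, e.g. 30000
  local max="${range#*-}"   # part after the dash, e.g. 32767
  local port bad=0
  for port in "$@"; do
    if (( port < min || port > max )); then
      echo "nodePort ${port} is outside ${range}"
      bad=1
    fi
  done
  return "$bad"
}

# Example usage (30000-40000 is only an illustrative widened range):
#   check_nodeports 30000-32767 39090 33000 39093   # reports all three ports
#   check_nodeports 30000-40000 39090 33000 39093   # succeeds silently
```

If any port is reported, either widen the range on kube-apiserver or change the nodePort values in the three Service manifests.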

Install

```
$ cd kube-prometheus-0.3.0/manifests/setup
$ kubectl apply -f .
namespace/monitoring created
customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created
clusterrole.rbac.authorization.k8s.io/prometheus-operator created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created
deployment.apps/prometheus-operator created
service/prometheus-operator created
serviceaccount/prometheus-operator created
```
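The setup step registers the CRDs (ServiceMonitor, Prometheus and so on) that the remaining manifests use, and these can take a moment to become available. If the main `kubectl apply` runs too early, it may fail with errors such as `no matches for kind "ServiceMonitor"`. The kube-prometheus README suggests polling `kubectl get servicemonitors --all-namespaces` until it succeeds.

A generic bash retry helper can do this. It is a sketch: the name `retry` and the attempt and delay values in the example are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: re-run a command until it succeeds or the attempt
# limit is reached.
# Usage: retry <max_attempts> <sleep_seconds> <command> [args...]
retry() {
  local max="$1" delay="$2"; shift 2
  local attempt=1
  until "$@"; do
    if (( attempt >= max )); then
      echo "giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example usage, between the setup step and the main manifests:
#   retry 60 2 kubectl get servicemonitors --all-namespaces
#   # then apply the manifests directory as shown next
```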

```
$ cd kube-prometheus-0.3.0/manifests
$ kubectl apply -f .
alertmanager.monitoring.coreos.com/main created
secret/alertmanager-main created
service/alertmanager-main created
serviceaccount/alertmanager-main created
servicemonitor.monitoring.coreos.com/alertmanager created
secret/grafana-datasources created
configmap/grafana-dashboard-apiserver created
configmap/grafana-dashboard-cluster-total created
configmap/grafana-dashboard-controller-manager created
configmap/grafana-dashboard-k8s-resources-cluster created
configmap/grafana-dashboard-k8s-resources-namespace created
configmap/grafana-dashboard-k8s-resources-node created
configmap/grafana-dashboard-k8s-resources-pod created
configmap/grafana-dashboard-k8s-resources-workload created
configmap/grafana-dashboard-k8s-resources-workloads-namespace created
configmap/grafana-dashboard-kubelet created
configmap/grafana-dashboard-namespace-by-pod created
configmap/grafana-dashboard-namespace-by-workload created
configmap/grafana-dashboard-node-cluster-rsrc-use created
configmap/grafana-dashboard-node-rsrc-use created
configmap/grafana-dashboard-nodes created
configmap/grafana-dashboard-persistentvolumesusage created
configmap/grafana-dashboard-pod-total created
configmap/grafana-dashboard-pods created
configmap/grafana-dashboard-prometheus-remote-write created
configmap/grafana-dashboard-prometheus created
configmap/grafana-dashboard-proxy created
configmap/grafana-dashboard-scheduler created
configmap/grafana-dashboard-statefulset created
configmap/grafana-dashboard-workload-total created
configmap/grafana-dashboards created
deployment.apps/grafana created
service/grafana created
serviceaccount/grafana created
servicemonitor.monitoring.coreos.com/grafana created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
deployment.apps/kube-state-metrics created
role.rbac.authorization.k8s.io/kube-state-metrics created
rolebinding.rbac.authorization.k8s.io/kube-state-metrics created
service/kube-state-metrics created
serviceaccount/kube-state-metrics created
servicemonitor.monitoring.coreos.com/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/node-exporter created
clusterrolebinding.rbac.authorization.k8s.io/node-exporter created
daemonset.apps/node-exporter created
service/node-exporter created
serviceaccount/node-exporter created
servicemonitor.monitoring.coreos.com/node-exporter created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io configured
clusterrole.rbac.authorization.k8s.io/prometheus-adapter created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader configured
clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created
clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created
clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created
configmap/adapter-config created
deployment.apps/prometheus-adapter created
rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created
service/prometheus-adapter created
serviceaccount/prometheus-adapter created
clusterrole.rbac.authorization.k8s.io/prometheus-k8s created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created
servicemonitor.monitoring.coreos.com/prometheus-operator created
prometheus.monitoring.coreos.com/k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s-config created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
prometheusrule.monitoring.coreos.com/prometheus-k8s-rules created
service/prometheus-k8s created
serviceaccount/prometheus-k8s created
servicemonitor.monitoring.coreos.com/prometheus created
servicemonitor.monitoring.coreos.com/kube-apiserver created
servicemonitor.monitoring.coreos.com/coredns created
servicemonitor.monitoring.coreos.com/kube-controller-manager created
servicemonitor.monitoring.coreos.com/kube-scheduler created
servicemonitor.monitoring.coreos.com/kubelet created
```

Check the status

```
$ kubectl get pods -n monitoring
NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          1h
alertmanager-main-1                    2/2     Running   0          1h
alertmanager-main-2                    2/2     Running   0          1h
grafana-5db74b88f4-wl4gv               1/1     Running   0          1h
kube-state-metrics-54f98c4687-7796f    3/3     Running   0          1h
node-exporter-s9j2q                    2/2     Running   0          1h
node-exporter-sjtpz                    2/2     Running   0          1h
node-exporter-wgh7z                    2/2     Running   0          1h
node-exporter-wrn9c                    2/2     Running   0          1h
prometheus-adapter-8667948d79-dwblv    1/1     Running   0          1h
prometheus-k8s-0                       3/3     Running   1          1h
prometheus-k8s-1                       3/3     Running   1          1h
prometheus-operator-5f759fd859-7xs76   1/1     Running   0          1h
```
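On a larger cluster this list gets long, so a filter for pods that are not fully ready can help. The bash sketch below reads `kubectl get pods` output (default column layout assumed) on stdin. It prints every pod whose READY counts differ or whose STATUS is not Running. The function name is hypothetical.

Pods of completed Jobs would also be reported, which does not matter for the monitoring namespace here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin (header line
# first) and print the name of every pod whose READY column is "x/y" with
# x != y, or whose STATUS column is not "Running".
# Returns 1 if at least one such pod was found, 0 otherwise.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") { print $1; bad = 1 }
       }
       END { exit bad }'
}

# Example usage:
#   kubectl get pods -n monitoring | not_ready_pods && echo "all pods ready"
```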

Troubleshooting

After installation you may run into some problems while using the stack. A few common ones are recorded here.

Problem 1

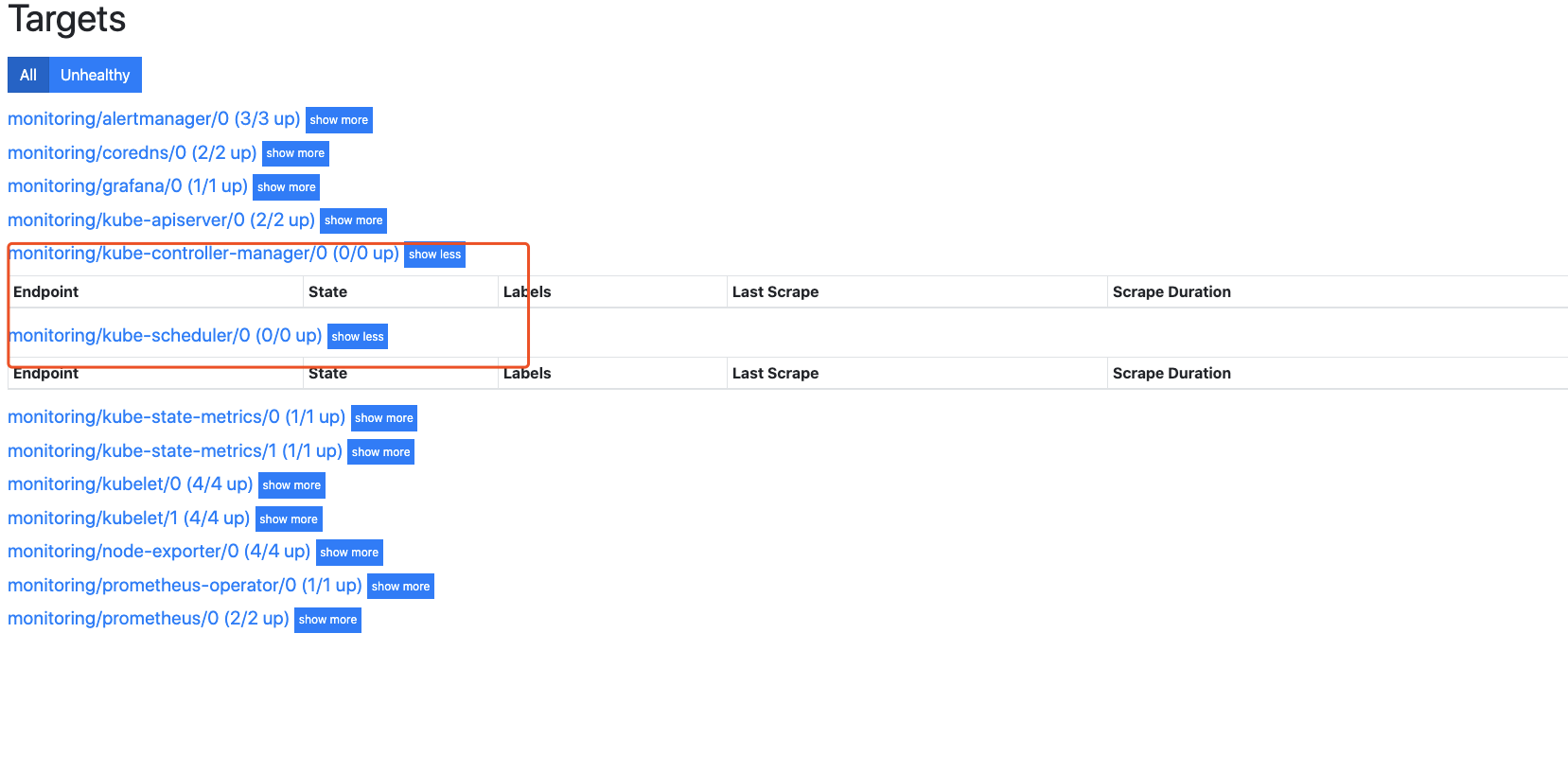

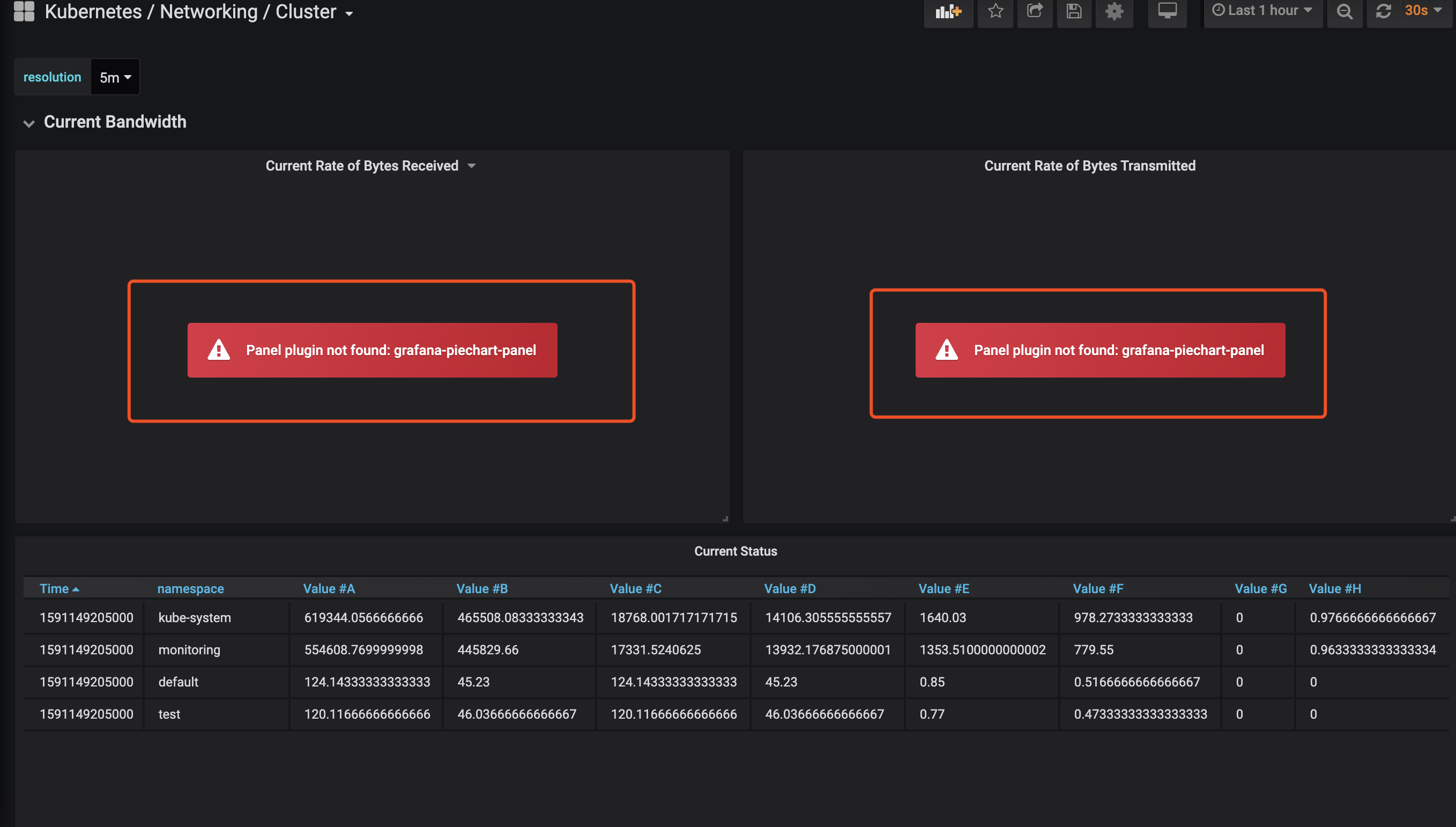

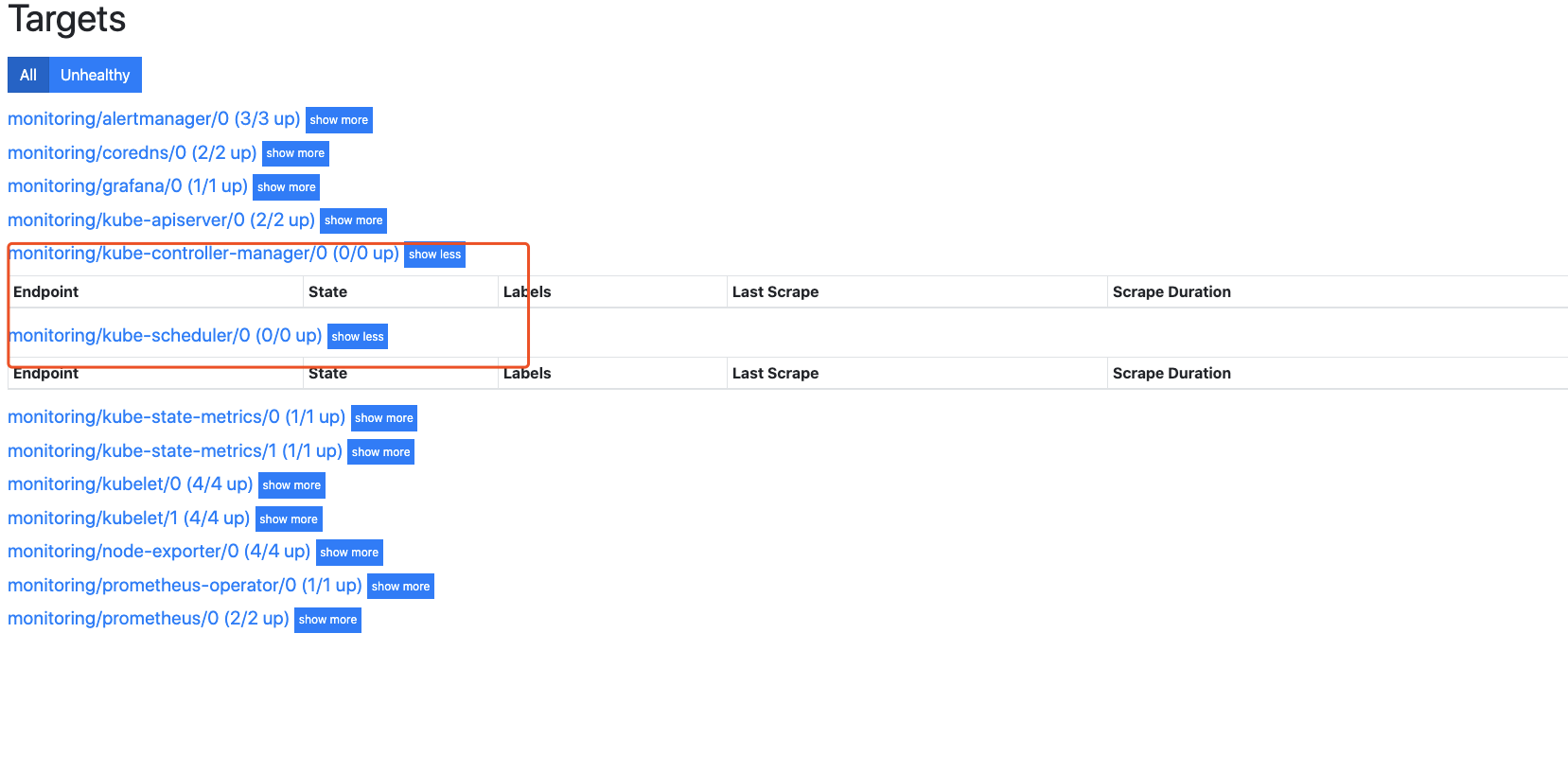

On the Prometheus web UI, the kube-controller-manager and kube-scheduler targets show 0/0 (see the screenshots).

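Solution

(Note: my cluster was installed from binaries. For clusters deployed with kubeadm the fix is somewhat different; see the references at the end of this article.)

Cause: the ServiceMonitors shipped with kube-prometheus look in the kube-system namespace for Services labeled `k8s-app: kube-controller-manager` and `k8s-app: kube-scheduler`, each exposing a port named `http-metrics`. A binary-installed cluster has no such Services, so Prometheus discovers no targets.

Fix: create headless Services without a selector. Then add Endpoints objects, each with the same name as its Service, that list the master nodes' IPs. Replace 172.16.77.86 and 172.16.77.36 with your own masters.

10252 and 10251 are the default insecure metrics ports of kube-controller-manager and kube-scheduler in this Kubernetes version.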

```
$ vim controller-manager-scheduler-svc.yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    k8s-app: kube-controller-manager   # label the ServiceMonitor selects on
spec:
  type: ClusterIP
  clusterIP: None                      # headless, no selector: endpoints are managed by hand
  ports:
  - name: http-metrics                 # port name the ServiceMonitor scrapes
    port: 10252
    targetPort: 10252
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-scheduler
  labels:
    k8s-app: kube-scheduler
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: http-metrics
    port: 10251
    targetPort: 10251
    protocol: TCP

$ vim controller-manager-scheduler-ep.yaml
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager        # must match the Service name
  namespace: kube-system
subsets:
- addresses:
  - ip: 172.16.77.86                   # master node IPs; replace with your own
  - ip: 172.16.77.36
  ports:
  - name: http-metrics
    port: 10252
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-scheduler
  name: kube-scheduler
  namespace: kube-system
subsets:
- addresses:
  - ip: 172.16.77.86
  - ip: 172.16.77.36
  ports:
  - name: http-metrics
    port: 10251
    protocol: TCP

$ kubectl apply -f controller-manager-scheduler-svc.yaml
$ kubectl apply -f controller-manager-scheduler-ep.yaml
```
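The master IPs are hard-coded in the Endpoints manifest. When masters are added or replaced, it can be easier to generate the manifest than to edit it by hand.

This is a minimal bash sketch. `gen_endpoints` is a hypothetical helper that reproduces the structure of controller-manager-scheduler-ep.yaml for one component. The label, namespace and port name are taken from that file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an Endpoints manifest for a selector-less
# Service in kube-system. The Endpoints object must have the same name as
# the Service it backs.
# Usage: gen_endpoints <name> <port> <ip> [ip...]
gen_endpoints() {
  local name="$1" port="$2"; shift 2
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: ${name}
  name: ${name}
  namespace: kube-system
subsets:
- addresses:
EOF
  local ip
  for ip in "$@"; do
    echo "  - ip: ${ip}"
  done
  cat <<EOF
  ports:
  - name: http-metrics
    port: ${port}
    protocol: TCP
EOF
}

# Example usage:
#   gen_endpoints kube-controller-manager 10252 172.16.77.86 172.16.77.36 | kubectl apply -f -
#   gen_endpoints kube-scheduler 10251 172.16.77.86 172.16.77.36 | kubectl apply -f -
```

Targets will still show as down if a component only listens on 127.0.0.1. Some binary-install guides start kube-controller-manager and kube-scheduler with `--address=127.0.0.1`. Running `curl http://<master-ip>:10252/metrics` from another node is a quick reachability check.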

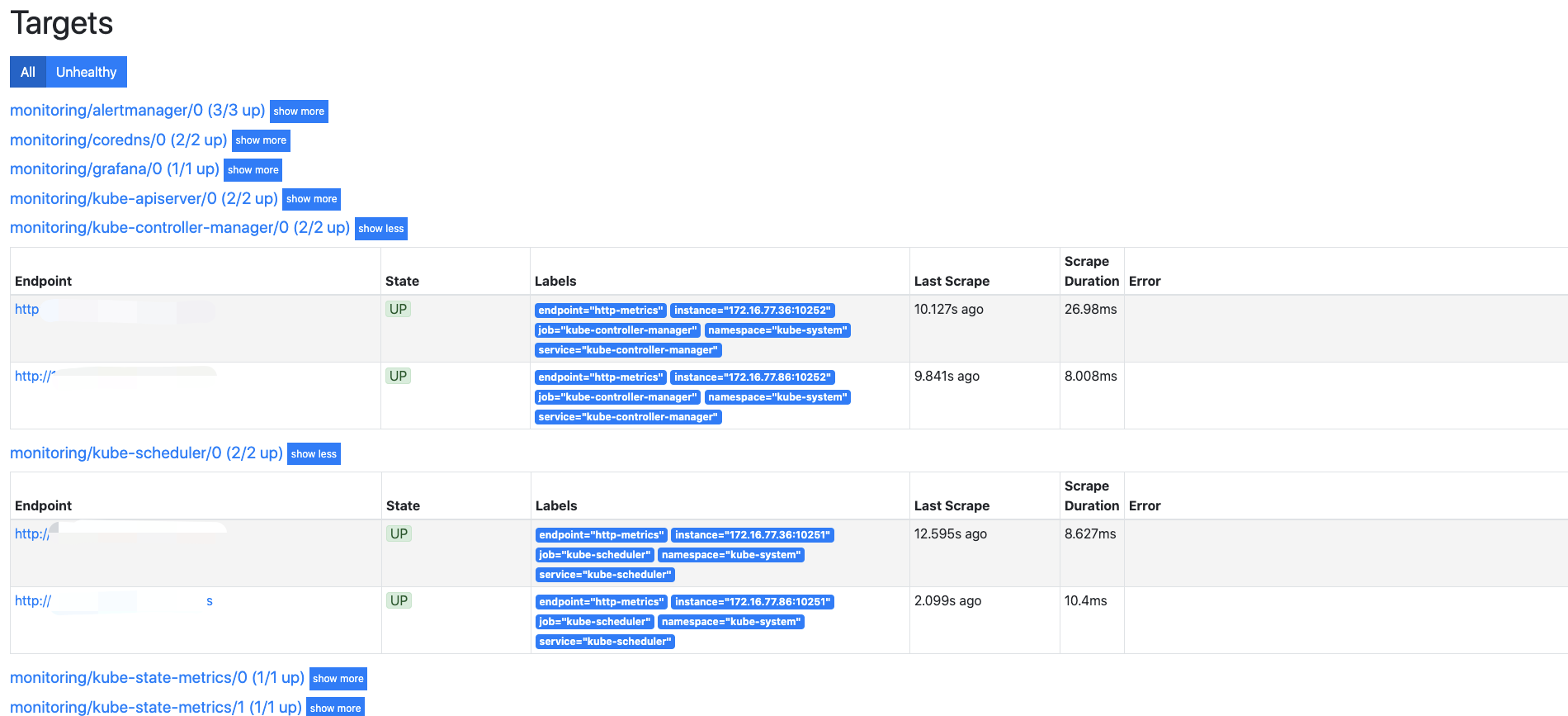

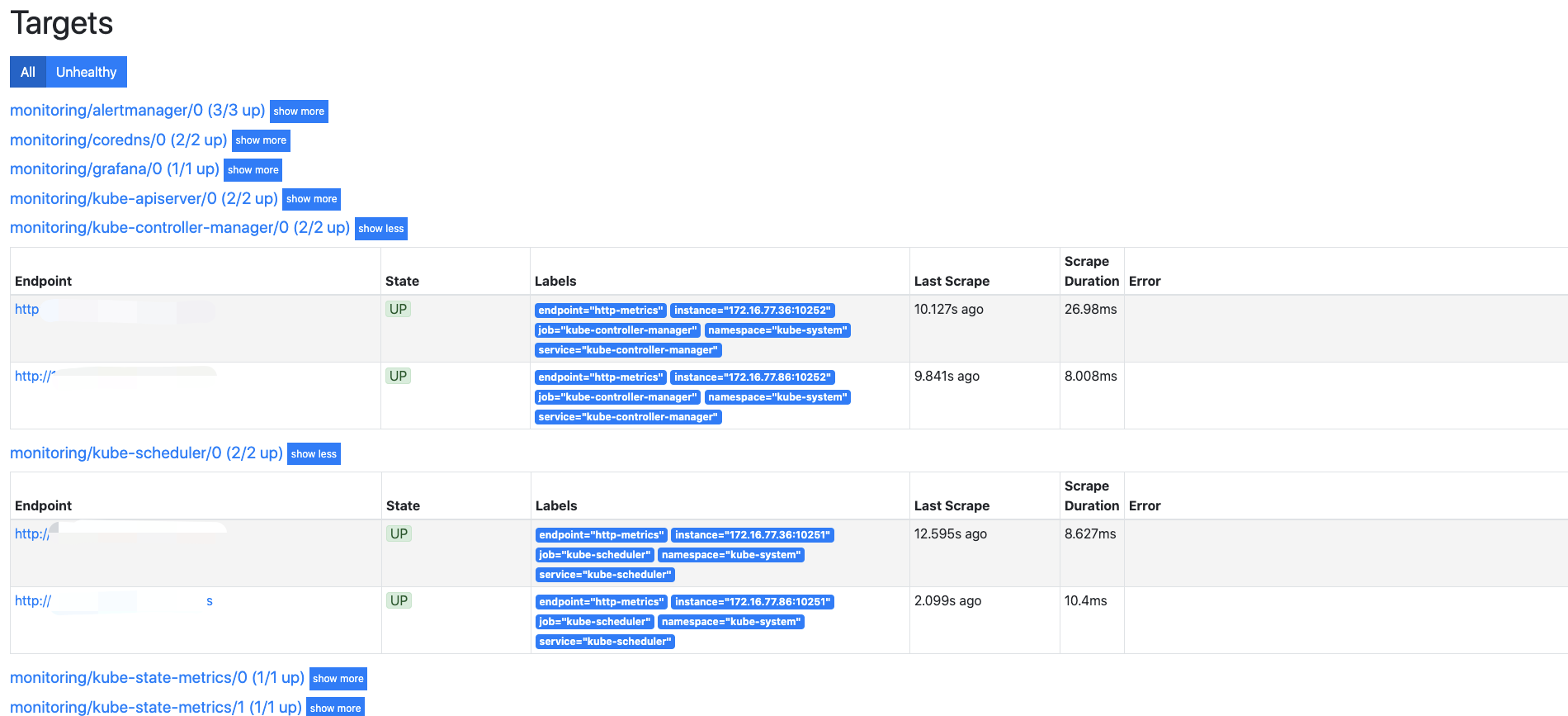

Checking the Prometheus page again, the targets now show up normally.

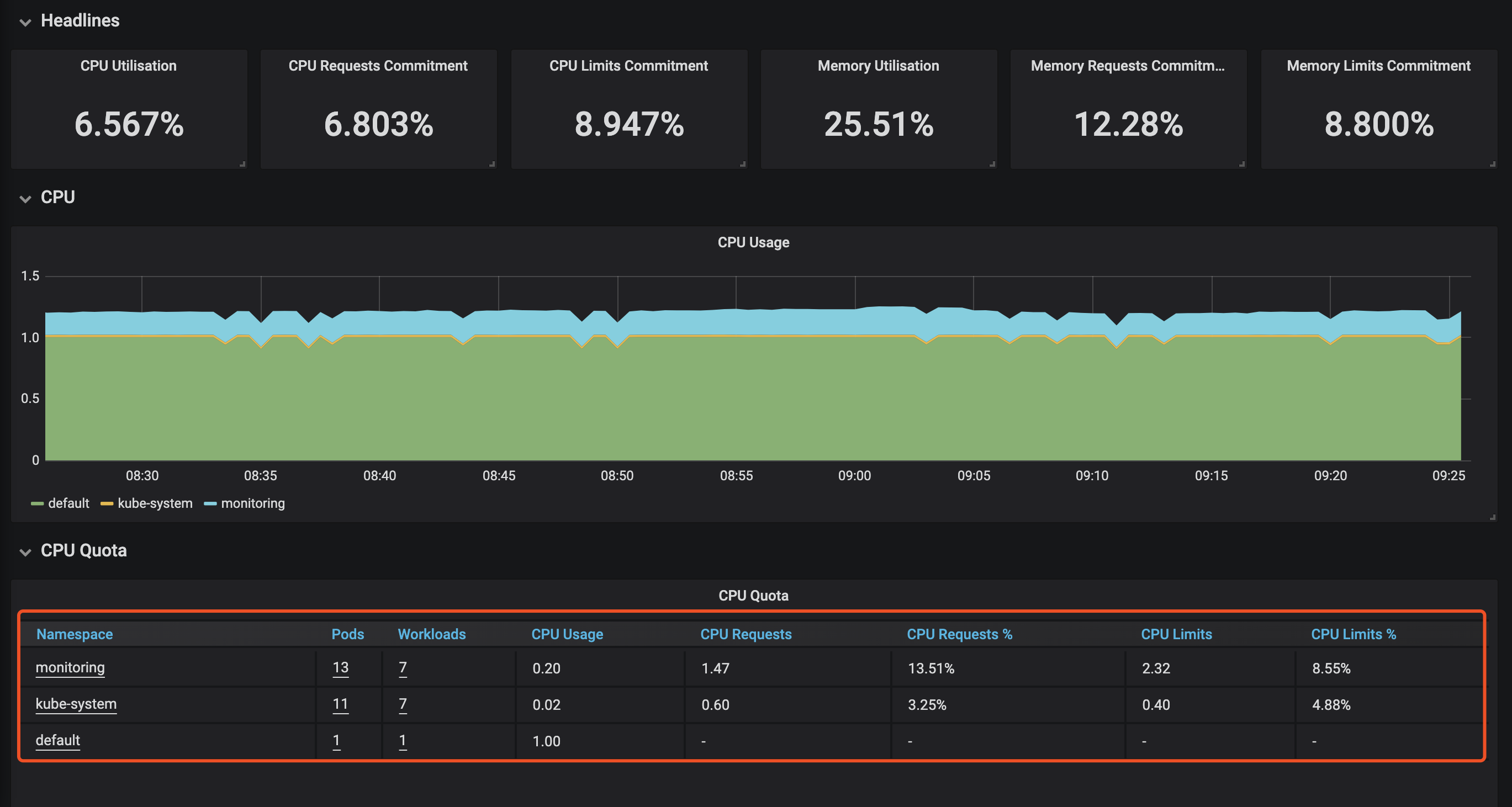

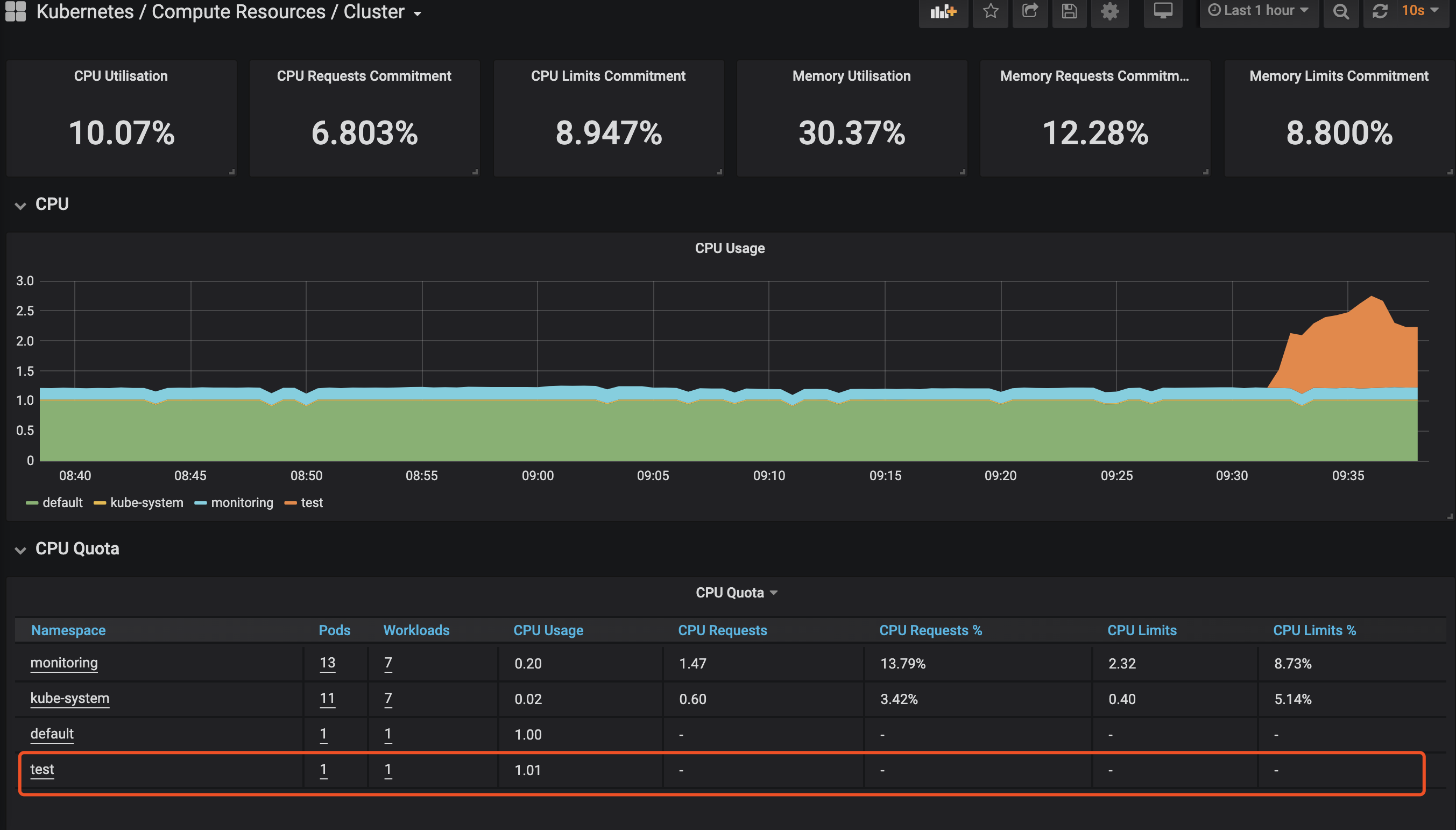

Problem 2

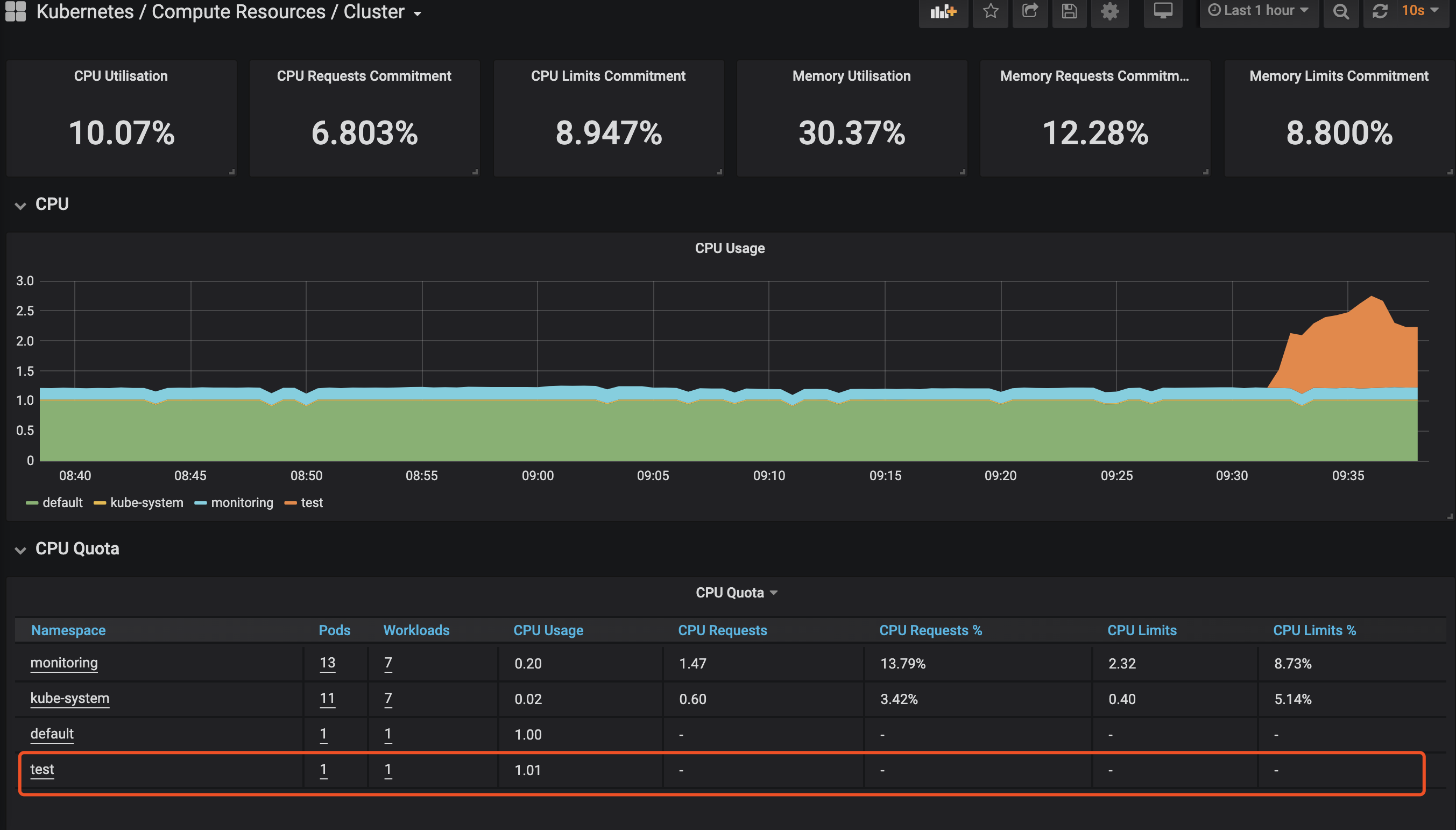

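By default, Prometheus can only discover ServiceMonitor targets in three namespaces: default, kube-system and monitoring. This is because the prometheus-k8s ServiceAccount is only granted a Role, with a matching RoleBinding, in those namespaces (see prometheus-roleSpecificNamespaces.yaml). For any other namespace you have to create the Role and RoleBinding yourself.

- Example: letting Prometheus monitor the `test` namespace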

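```
# Create the namespace, then a Role and a RoleBinding for Prometheus in it
$ kubectl create ns test
$ vim ns-test-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: prometheus-k8s
  namespace: test
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
---
# The Role grants nothing until it is bound to Prometheus' ServiceAccount
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-k8s
  namespace: test
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-k8s
subjects:
- kind: ServiceAccount
  name: prometheus-k8s
  namespace: monitoring
$ kubectl apply -f ns-test-role.yaml
```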
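When several namespaces need monitoring, you can generate the Role and RoleBinding per namespace. Prometheus needs both. The Role lists the permissions, and the RoleBinding grants them to the prometheus-k8s ServiceAccount in monitoring.

This is a bash sketch. `gen_prometheus_rbac` is a hypothetical helper, and its rules mirror the Role above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: for each namespace argument, print a Role that allows
# get/list/watch on services, endpoints and pods, plus a RoleBinding that
# grants it to the prometheus-k8s ServiceAccount in the monitoring namespace.
gen_prometheus_rbac() {
  local ns
  for ns in "$@"; do
    cat <<EOF
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: prometheus-k8s
  namespace: ${ns}
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-k8s
  namespace: ${ns}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-k8s
subjects:
- kind: ServiceAccount
  name: prometheus-k8s
  namespace: monitoring
EOF
  done
}

# Example usage:
#   gen_prometheus_rbac test | kubectl apply -f -
```

Afterwards, `kubectl auth can-i list endpoints -n test --as=system:serviceaccount:monitoring:prometheus-k8s` should answer `yes`.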

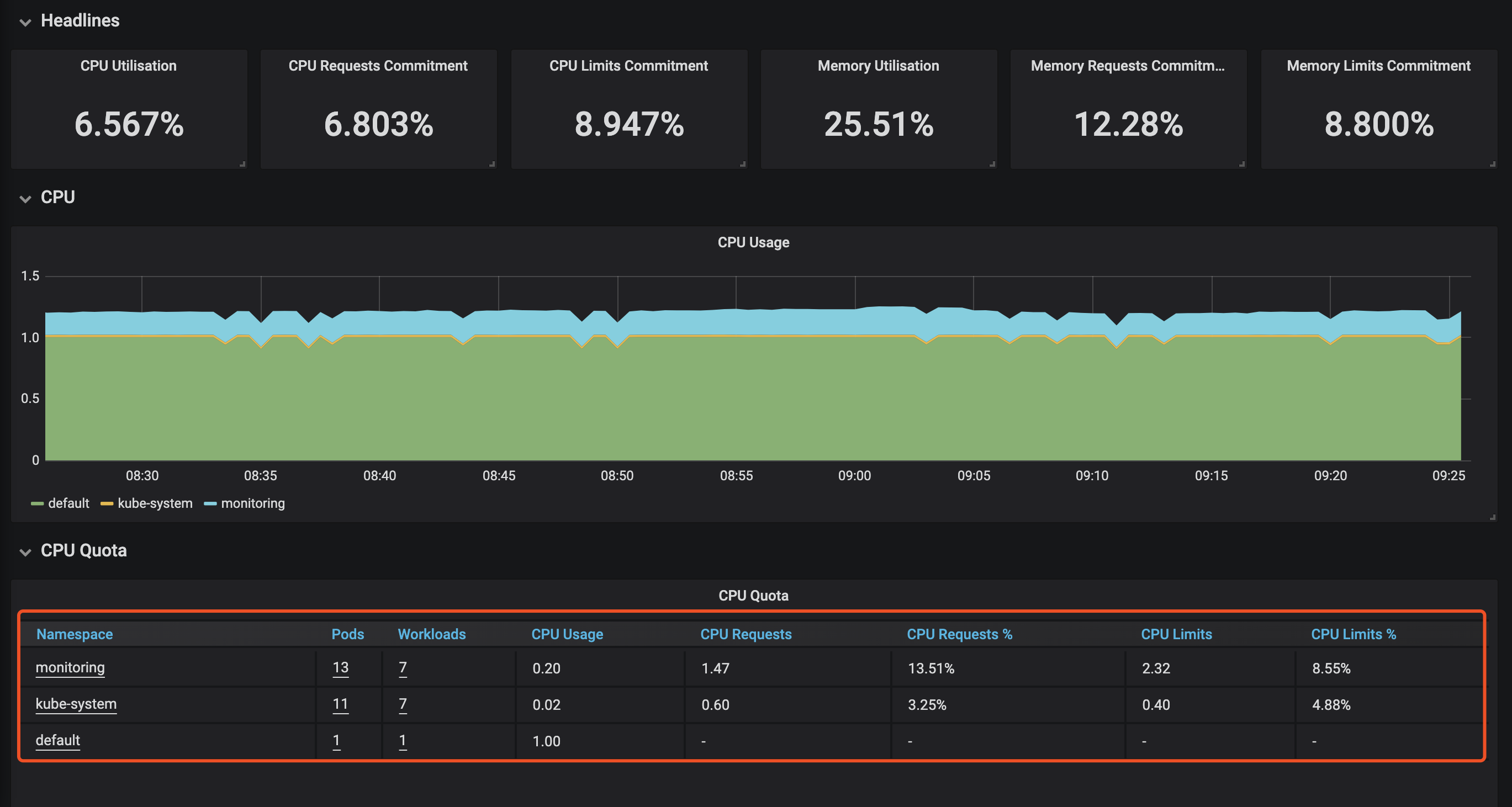

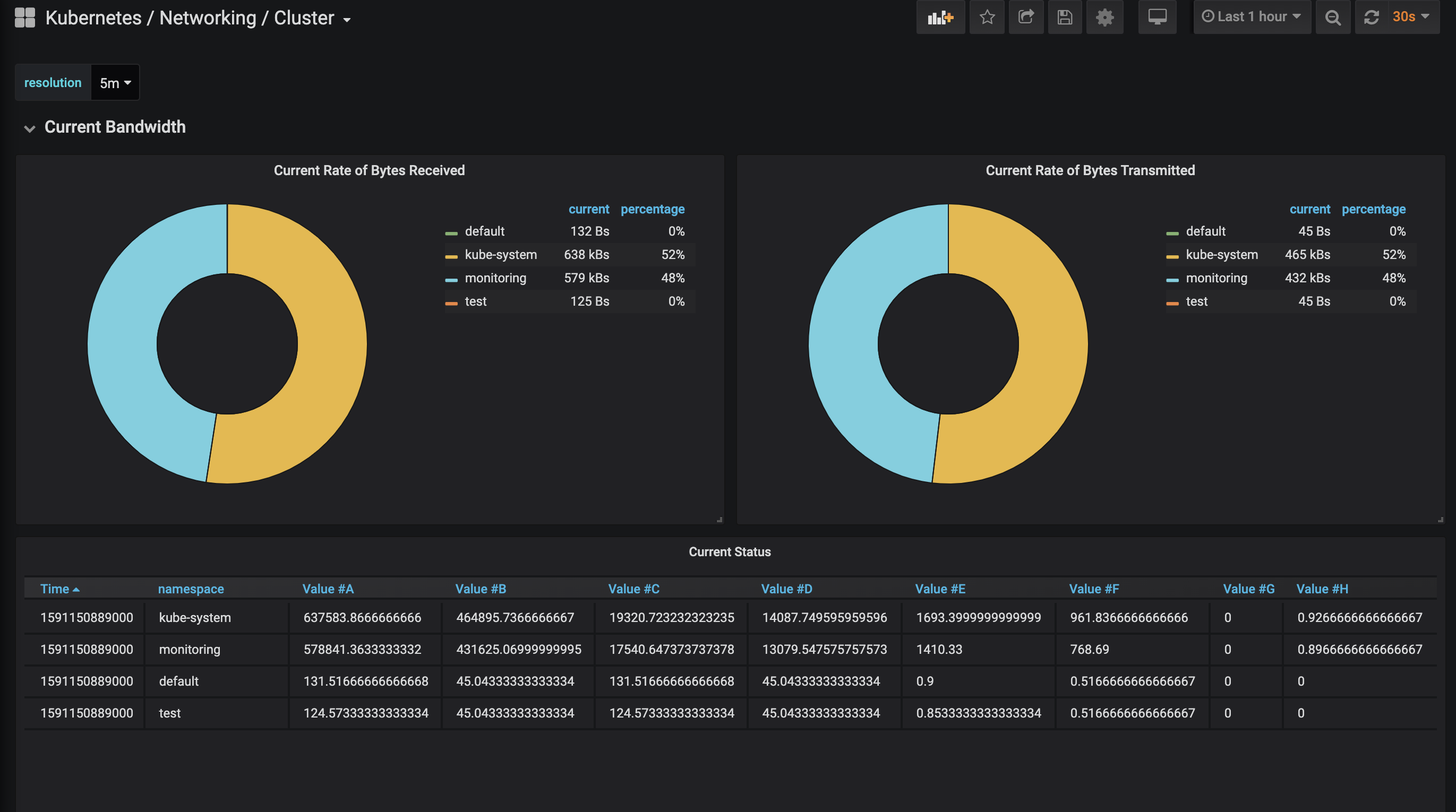

After creating a pod in the test namespace, check Grafana: the test namespace is now listed.

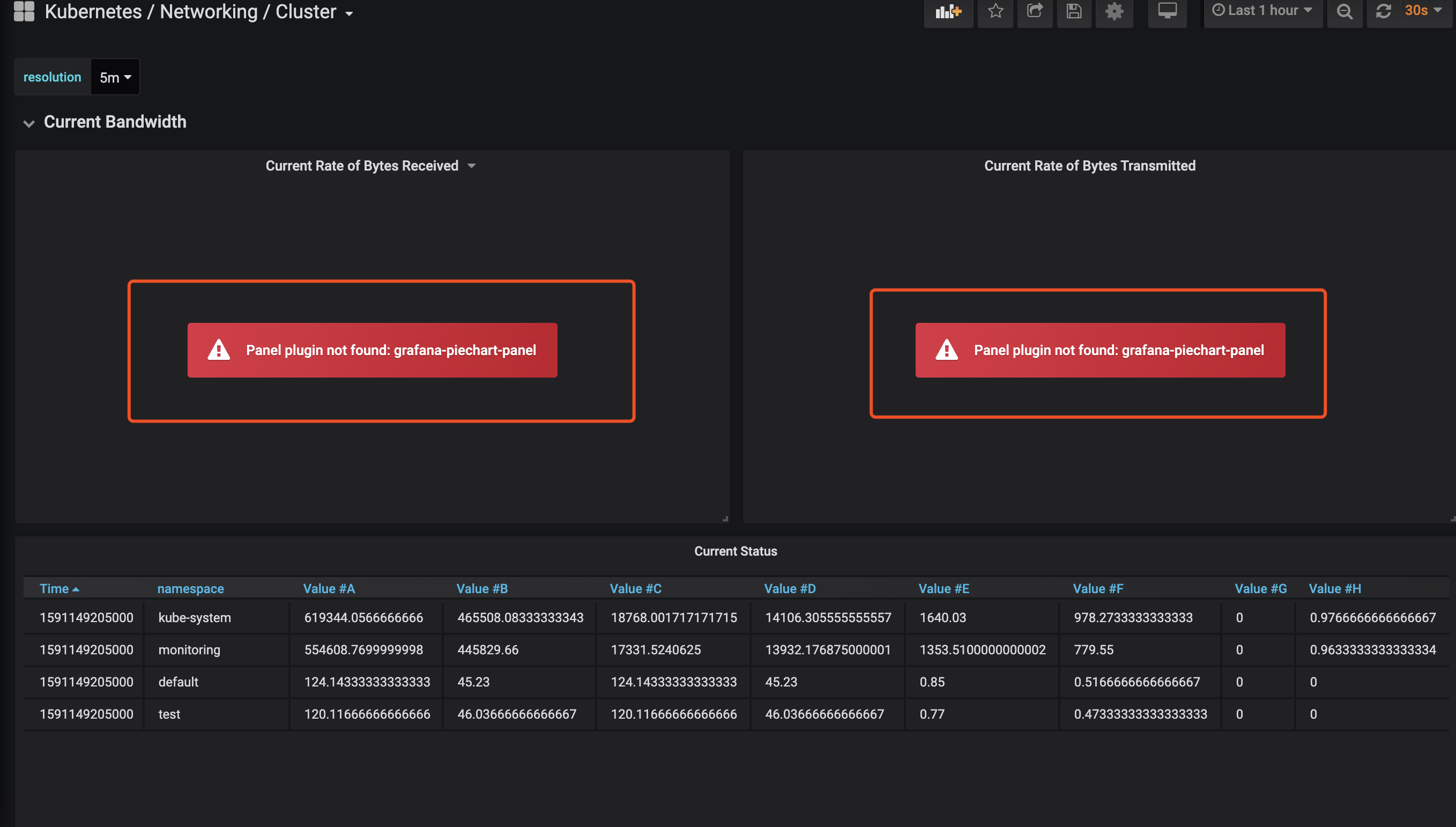

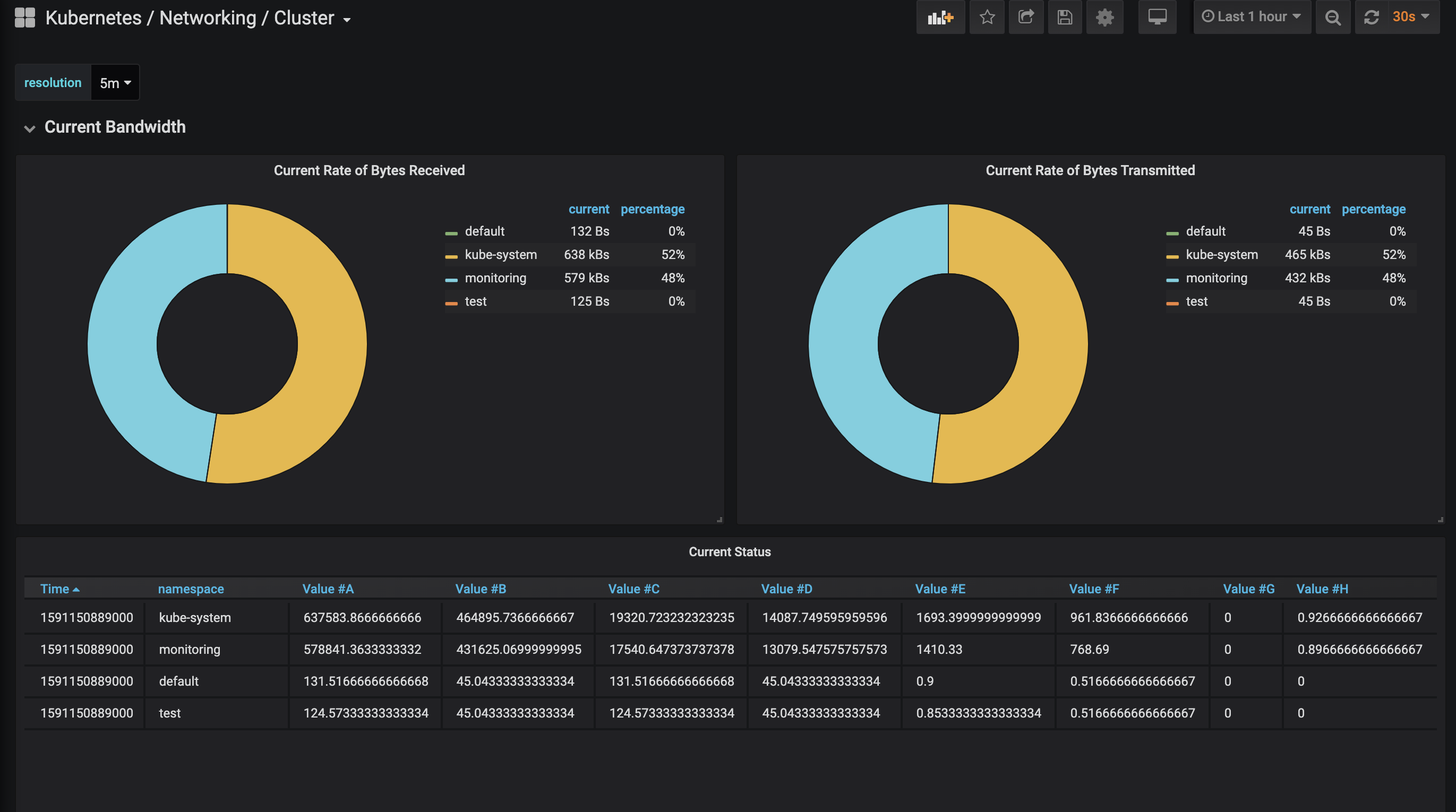

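Problem 3

The pie chart panel plugin grafana-piechart-panel is missing.

Solution

Method 1

Install the grafana-piechart-panel plugin from inside the Grafana pod. Then go to the node where the pod runs and restart the corresponding container with docker.

Note: the plugin is installed into Grafana's data directory, so it only survives a pod restart if /var/lib/grafana is persisted. Otherwise, once the pod is recreated, Grafana will again report "Panel plugin not found: grafana-piechart-panel".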

```
bash-5.0$ grafana-cli plugins install grafana-piechart-panel
installing grafana-piechart-panel @ 1.5.0
from: https://grafana.com/api/plugins/grafana-piechart-panel/versions/1.5.0/download
into: /var/lib/grafana/plugins

✔ Installed grafana-piechart-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>

# Note: although the message says to run `service grafana-server restart`, the container has no `service` command.
# To restart Grafana, go to the node running this pod and run: docker restart <CONTAINER ID>
```
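For the `docker restart` step you need the node and the container ID. Kubernetes reports container IDs with a runtime prefix such as `docker://`, which `docker restart` does not accept.

This is a small bash sketch. The helper name is hypothetical. The label `app=grafana` and the container name `grafana` in the usage comments are assumptions about the kube-prometheus Grafana Deployment; check them against your grafana-deployment.yaml.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a containerID as reported in a pod's
# .status.containerStatuses (e.g. "docker://3f2a9c...") into the bare ID
# that `docker restart` expects, by dropping the "<runtime>://" prefix.
# An ID without a prefix is returned unchanged.
strip_runtime_prefix() {
  local id="$1"
  echo "${id#*://}"
}

# Example usage (label and container name are assumptions, verify first):
#   POD=$(kubectl -n monitoring get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}')
#   kubectl -n monitoring get pod "$POD" -o jsonpath='{.spec.nodeName}'   # node to log in to
#   CID=$(kubectl -n monitoring get pod "$POD" -o jsonpath='{.status.containerStatuses[?(@.name=="grafana")].containerID}')
#   # then, on that node:
#   docker restart "$(strip_runtime_prefix "$CID")"
```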

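Method 2

Add an initContainer that installs grafana-piechart-panel (the approach I currently use). The init container mounts the same grafana-storage volume at /var/lib/grafana as the Grafana container. The plugin it installs is therefore visible to Grafana, and it is reinstalled every time the pod is recreated.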

```
$ cd kube-prometheus-0.3.0/manifests
$ vim grafana-deployment.yaml
......
    spec:    # add the following under the Pod template's spec (spec.template.spec)
      initContainers:
      - image: grafana/grafana:6.4.3
        name: grafana-init
        command:
        - bash
        - -c
        - grafana-cli plugins install grafana-piechart-panel
        volumeMounts:
        - mountPath: /var/lib/grafana   # same volume the Grafana container uses
          name: grafana-storage
          readOnly: false
......
$ kubectl apply -f grafana-deployment.yaml
```

Check Grafana again: the pie chart panels now render normally.
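You can also confirm the plugin without opening the dashboards, by listing the plugins inside the running Grafana container.

This is a small bash sketch. It assumes `grafana-cli plugins ls` prints one line per plugin beginning with the plugin ID (as in `grafana-piechart-panel @ 1.5.0`). The helper name and the `app=grafana` label are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `grafana-cli plugins ls` output on stdin and
# succeed only if some line's first field equals the given plugin ID.
has_plugin() {
  awk -v p="$1" '$1 == p { found = 1 } END { exit !found }'
}

# Example usage (pod lookup by label app=grafana is an assumption):
#   POD=$(kubectl -n monitoring get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}')
#   kubectl -n monitoring logs "$POD" -c grafana-init          # output of the init container
#   kubectl -n monitoring exec "$POD" -c grafana -- grafana-cli plugins ls \
#     | has_plugin grafana-piechart-panel && echo "piechart plugin present"
```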

References

- 张馆长的博客 (Zhang Guanzhang's blog)
- https://blog.csdn.net/twingao/article/details/105261641
- https://blog.csdn.net/tangwei0928/article/details/99711322
- https://github.com/coreos/kube-prometheus/issues/305

Author

dylan

LastMod

2020-08-02

License

If you repost this article, please credit the author and the source. Thank you!